As AI adoption accelerates, it is becoming deeply integrated into business operations. However, this introduces novel risks that must be proactively managed by aligning them with the organization's risk appetite.

Risk management in AI involves a continuous cycle (RML) of identifying, analyzing, responding to, and monitoring threats.

If you are studying for the Advanced in AI Security Management (AISM) certification, you must distinguish between threats that happen during training vs. inference.

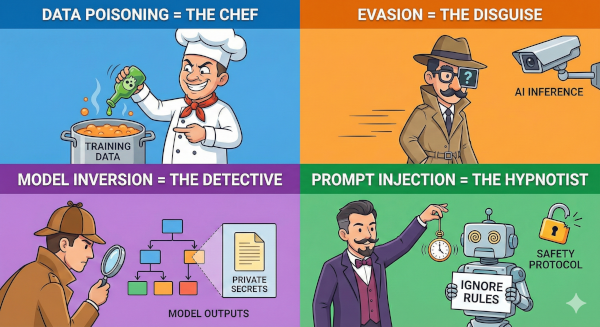

Use this "Analogy Guide" to remember the core attacks:

Happens during the "Rehearsal" (training). Like a chef adding poison to the ingredients before the meal is cooked, the attacker injects bad data before the model is built.

Happens during "Showtime" (inference). Like a criminal wearing fake glasses to fool a camera, the attacker alters inputs in real-time to deceive the model.

A privacy attack. The attacker analyzes outputs to work backward and reconstruct sensitive training data—figuring out the secrets hidden inside.

Social engineering for AI. The attacker crafts words to trick the model into ignoring its safety guidelines and leaking data.

To future-proof your organization, you should be familiar with the two primary frameworks guiding risk management today.

A flexible, voluntary guide designed to help organizations manage AI risks and ensure trustworthy systems across various sectors.

The world's first comprehensive legal framework. It categorizes systems by risk level and imposes strict compliance requirements that are mandatory by law.